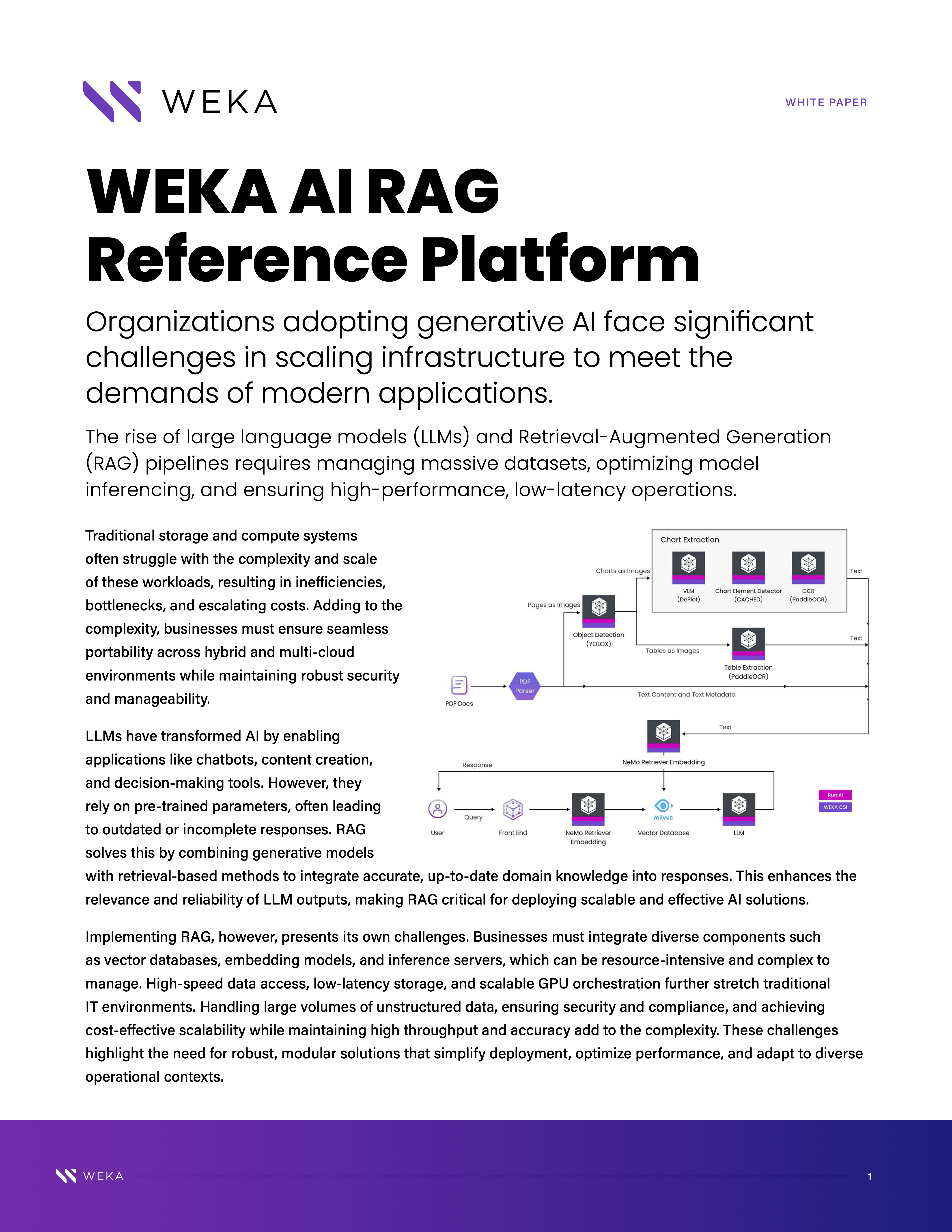

The rise of large language models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines presents significant challenges for scaling AI infrastructure. Traditional systems suffer from inefficiencies and escalating costs that hinder seamless operation across environments. This whitepaper presents an approach to streamlining AI processes and improving performance.

This whitepaper showcases an AI infrastructure design built on the WEKA Data Platform that leverages NVIDIA's technology suite and Run:ai for efficiency and scalability.

In this whitepaper, you'll learn how to:

- Optimize AI Infrastructure: Streamline data flow and reduce latency for efficient, scalable AI operations.

- Enhance Performance Metrics: Improve Time to First Token (TTFT) and Cost Per Token to boost overall system performance.

- Leverage Advanced Technologies: Integrate tools such as Milvus and NVIDIA technologies for accelerated inference and robust AI solutions.